2) SIB 1

3) SIB 2

4) RRC : PRACH Preamble

5) RRC : RACH Response

6) RRC : RRC Connection Request

7) RRC : RRC Connection Setup

8) RRC : RRC Connection Setup Complete + NAS : Attach Request

9) RRC : DL Information Transfer + NAS : Authentication Request

10) RRC : UL Information Transfer + NAS : Authentication Response

11) RRC : DL Information Transfer + NAS : Security Mode Command

12) RRC : UL Information Transfer + NAS : Security Mode Complete

13) RRC : Security Mode Command

14) RRC : Security Mode Complete

15) RRC : RRC Connection Reconfiguration + NAS : Attach Accept

16) RRC : RRC Connection Reconfiguration Complete + NAS : Attach Complete

17) RRC : RRC Connection Release

18) RRC : PRACH Preamble

19) RRC : RACH Response

20) RRC : RRC Connection Request

21) RRC : RRC Connection Setup

22) RRC : RRC Connection Setup Complete + NAS : Service Request

23) RRC : Security Mode Command

24) RRC : Security Mode Complete

25) RRC : RRC Connection Reconfiguration + NAS : Activate Dedicated EPS Bearer Context Request

26) RRC : RRC Connection Reconfiguration Complete + NAS : Activate Dedicated EPS Bearer Context Accept

27) RRC : UL Information Transfer + NAS : Deactivate Dedicated EPS Bearer Context Accept

28) RRC : RRC Connection Release

LTE Unique Sequences

Even though the overall sequence is quite similar to the WCDMA sequence, there are a couple of points where it differs from WCDMA.

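The first point to look at is that in LTE the 'RACH Preamble' shows up in the signalling sequence alongside the RRC messages. Strictly speaking, the LTE random access procedure is handled by the MAC layer (36.321 section 5.1). As you know, there was a RACH process in WCDMA as well, but there it was treated as part of the physical layer process.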

Another difference I notice is that RRC Connection Setup Complete and Attach Request are carried in a single step: the NAS Attach Request is piggybacked inside the RRC Connection Setup Complete message.
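To make the piggybacking point concrete, here is a minimal sketch that encodes steps 4 to 17 of the sequence listed earlier as data and lists which RRC messages carry a NAS PDU. The names `Step`, `ATTACH` and `piggybacked` are hypothetical and exist only for illustration. In the RRC ASN.1, the NAS PDU travels as an opaque octet string (the `dedicatedInfoNAS` field), so RRC does not interpret it.

```python
from typing import NamedTuple, Optional


class Step(NamedTuple):
    """One step of the attach sequence (hypothetical model for illustration)."""
    number: int
    rrc: str
    nas: Optional[str]  # None when the RRC message carries no NAS PDU


# Steps 4-17 of the attach sequence (SIBs omitted).
ATTACH = [
    Step(4, "PRACH Preamble", None),
    Step(5, "RACH Response", None),
    Step(6, "RRC Connection Request", None),
    Step(7, "RRC Connection Setup", None),
    Step(8, "RRC Connection Setup Complete", "Attach Request"),
    Step(9, "DL Information Transfer", "Authentication Request"),
    Step(10, "UL Information Transfer", "Authentication Response"),
    Step(11, "DL Information Transfer", "Security Mode Command"),
    Step(12, "UL Information Transfer", "Security Mode Complete"),
    Step(13, "Security Mode Command", None),
    Step(14, "Security Mode Complete", None),
    Step(15, "RRC Connection Reconfiguration", "Attach Accept"),
    Step(16, "RRC Connection Reconfiguration Complete", "Attach Complete"),
    Step(17, "RRC Connection Release", None),
]


def piggybacked(steps):
    """Return (RRC message, NAS message) pairs where NAS rides inside RRC."""
    return [(s.rrc, s.nas) for s in steps if s.nas is not None]


for rrc, nas in piggybacked(ATTACH):
    print(f"{rrc:40s} carries NAS {nas}")
```

Note that steps 13 and 14 (the RRC-level security mode procedure) carry no NAS PDU, even though NAS has its own Security Mode Command in steps 11 and 12.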

These are the differences you can see just by looking at the message types. You will find more differences when you go into the information elements of each message, as the following sections show.

Overall Comparison with WCDMA

The first thing you will notice is that far fewer SIBs are transmitted in LTE than in WCDMA. Of course, there are more SIBs that are not transmitted in this sequence (LTE has 10 SIBs in total), but these two SIBs alone carry all the information the UE needs to camp on the network. WCDMA has 18 SIBs in total, and in most cases we used at least SIB1, 3, 5, 7 and 11 even in very basic configurations. Some WCDMA SIBs, such as SIB5 and SIB11, also have multiple segments. In LTE, the number of SIBs is small and none of them is segmented.

1) MIB

The MIB in LTE carries very minimal information (a big difference from the WCDMA MIB). The only information it carries is:

i) BandWidth

ii) PHICH

iii) SystemFrameNumber

Of course the most important information is "BandWidth".
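Because the MIB is so small, you can decode it by hand. Here is a minimal sketch, assuming the 24-bit unaligned PER layout of the Rel-8 MIB:

- `dl-Bandwidth`: 3 bits, ENUMERATED n6..n100
- `phich-Duration`: 1 bit
- `phich-Resource`: 2 bits
- `systemFrameNumber`: 8 bits, the 8 most significant bits of the 10-bit SFN
- 10 spare bits

The function name `decode_mib` is hypothetical. The 2 least significant SFN bits are not in the MIB; the UE derives them from the position of the PBCH within its 40 ms period.

```python
DL_BANDWIDTH_RB = [6, 15, 25, 50, 75, 100]   # ENUMERATED {n6, n15, n25, n50, n75, n100}
PHICH_DURATION = ["normal", "extended"]
PHICH_RESOURCE = ["oneSixth", "half", "one", "two"]


def decode_mib(payload: int) -> dict:
    """Decode a 24-bit MIB (MSB first) into its fields."""
    if not 0 <= payload < (1 << 24):
        raise ValueError("MIB payload must fit in 24 bits")
    bw_idx = (payload >> 21) & 0b111      # bits 23..21
    duration = (payload >> 20) & 0b1      # bit 20
    resource = (payload >> 18) & 0b11     # bits 19..18
    sfn_msb = (payload >> 10) & 0xFF      # bits 17..10; bits 9..0 are spare
    if bw_idx >= len(DL_BANDWIDTH_RB):
        raise ValueError(f"invalid dl-Bandwidth index {bw_idx}")
    return {
        "dl_bandwidth_rb": DL_BANDWIDTH_RB[bw_idx],
        "phich_duration": PHICH_DURATION[duration],
        "phich_resource": PHICH_RESOURCE[resource],
        "sfn_msb": sfn_msb,
    }


# n100, normal duration, phich-Resource "one", SFN MSBs 0x12
payload = (5 << 21) | (0 << 20) | (2 << 18) | (0x12 << 10)
print(decode_mib(payload))
```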

According to 36.331 section 5.2.1.2, the MIB scheduling is as follows :

The MIB uses a fixed schedule with a periodicity of 40 ms and repetitions made within 40 ms. The first transmission of the MIB is scheduled in subframe #0 of radio frames for which the SFN mod 4 = 0, and repetitions are scheduled in subframe #0 of all other radio frames.
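The quoted rule can be expressed as a small classifier. This is a minimal sketch: the function name `mib_transmission` is hypothetical, and it only encodes the schedule quoted from 36.331 section 5.2.1.2. Four radio frames of 10 ms each give the 40 ms period.

```python
def mib_transmission(sfn, subframe):
    """Classify (SFN, subframe) under the MIB schedule of 36.331 section 5.2.1.2.

    Returns "first", "repetition", or None when no MIB is sent there.
    """
    if not (0 <= sfn < 1024 and 0 <= subframe < 10):
        raise ValueError("SFN must be 0..1023 and subframe 0..9")
    if subframe != 0:
        return None  # the MIB only goes in subframe #0
    # A new MIB starts every 4 radio frames (40 ms); the other 3 frames repeat it.
    return "first" if sfn % 4 == 0 else "repetition"


for sfn in range(8):
    print(sfn, mib_transmission(sfn, 0))
```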

2) SIB 1

SIB1 in LTE contains information similar to that in the WCDMA MIB, SIB1 and SIB3. The most important information in SIB1 is:

i) PLMN

ii) Tracking Area Code

iii) Cell Selection Info

iv) Frequency Band Indicator

v) Scheduling information (periodicity) of other SIBs

You may notice that LTE SIB1 is very similar to WCDMA MIB.

Especially during initial test case development, you have to be very careful about item v). If you set this value incorrectly, the UE will not be able to decode any of the other SIBs. As a result, the UE will not recognize the cell and will show "No Service".
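Item v) matters because the UE computes where each SI message's window starts from the position of its entry in `schedulingInfoList`, using `si-Periodicity` and `si-WindowLength` (36.331 section 5.2.3):

- x = (n - 1) * w, where w is the window length
- the window starts in subframe x mod 10
- the start frame is the radio frame with SFN mod T = floor(x / 10)

Here is a minimal sketch of that calculation. The helper name `si_window_start` is hypothetical. The sketch ignores the subframes inside a window that cannot carry SI messages, such as subframe #5 of even frames, which is used by SIB1. The range check is a sanity check added here: a start frame outside one period can never be reached, so the UE would never find that window.

```python
# ENUMERATED values of si-Periodicity and si-WindowLength in 36.331
SI_PERIODICITY_RF = {"rf8": 8, "rf16": 16, "rf32": 32, "rf64": 64,
                     "rf128": 128, "rf256": 256, "rf512": 512}
SI_WINDOW_MS = {"ms1": 1, "ms2": 2, "ms5": 5, "ms10": 10,
                "ms15": 15, "ms20": 20, "ms40": 40}


def si_window_start(n, si_periodicity, si_window_length):
    """Where the SI-window of the n-th schedulingInfoList entry (1-based) starts.

    Returns (sfn_mod_t, t, subframe): the window starts in `subframe` of every
    radio frame whose SFN satisfies SFN mod t == sfn_mod_t.
    """
    w = SI_WINDOW_MS[si_window_length]
    t = SI_PERIODICITY_RF[si_periodicity]
    x = (n - 1) * w
    if x // 10 >= t:
        # SFN mod T can never reach this value, so the window is never found.
        raise ValueError(f"entry {n}: window start lies outside the {t}-frame period")
    return x // 10, t, x % 10


# Like the SIB1 example in this section: two SI messages, rf8, ms20.
for n in (1, 2):
    print(n, si_window_start(n, "rf8", "ms20"))
```

With those values, the first SI message (which always carries SIB2) starts at SFN mod 8 = 0. The second starts at SFN mod 8 = 2, both in subframe #0.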

According to 36.331 section 5.2.1.2, the SIB1 scheduling is as follows :

The SystemInformationBlockType1 uses a fixed schedule with a periodicity of 80 ms and repetitions made within 80 ms. The first transmission of SystemInformationBlockType1 is scheduled in subframe #5 of radio frames for which the SFN mod 8 = 0, and repetitions are scheduled in subframe #5 of all other radio frames for which SFN mod 2 = 0.

This means that even though the SIB1 periodicity is 80 ms, a copy of SIB1 with a different redundancy version (RV) is transmitted every 20 ms. In other words, at L3 you will see a new SIB1 every 80 ms, but at the PHY layer you will see it every 20 ms. For the detailed RV assignment for each transmission, refer to 36.321 section 5.3.1 (the last part of the section).
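Putting the schedule and the RV rule together gives the following minimal sketch. The function name `sib1_transmission` is hypothetical. The RV formula is the SIB1 rule from 36.321 section 5.3.1: RV = ceil(3k/2) mod 4, with k = (SFN/2) mod 4. It is computed with integer arithmetic to avoid floating point.

```python
def sib1_transmission(sfn, subframe):
    """Return (kind, rv) if SIB1 is sent in this (SFN, subframe), else None.

    Schedule per 36.331 section 5.2.1.2; RV per 36.321 section 5.3.1.
    """
    if not (0 <= sfn < 1024 and 0 <= subframe < 10):
        raise ValueError("SFN must be 0..1023 and subframe 0..9")
    if subframe != 5 or sfn % 2 != 0:
        return None  # SIB1 only in subframe #5 of even radio frames
    kind = "first" if sfn % 8 == 0 else "repetition"  # new content every 80 ms
    k = (sfn // 2) % 4
    rv = ((3 * k + 1) // 2) % 4  # integer form of ceil(3k/2), then mod 4
    return kind, rv


for sfn in range(0, 8, 2):
    print(sfn, sib1_transmission(sfn, 5))  # RV 0, 2, 3, 1 for SFN 0, 2, 4, 6
```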

One example of LTE SIB1 is as follows :

RRC_LTE:BCCH-DL-SCH-Message

BCCH-DL-SCH-Message ::= SEQUENCE

+-message ::= CHOICE [c1]

+-c1 ::= CHOICE [systemInformationBlockType1]

+-systemInformationBlockType1 ::= SEQUENCE [000]

+-cellAccessRelatedInfo ::= SEQUENCE [0]

+-plmn-IdentityList ::= SEQUENCE OF SIZE(1..6) [1]

+-PLMN-IdentityInfo ::= SEQUENCE

+-plmn-Identity ::= SEQUENCE [1]

+-mcc ::= SEQUENCE OF SIZE(3) OPTIONAL:Exist

+-MCC-MNC-Digit ::= INTEGER (0..9) [0]

+-MCC-MNC-Digit ::= INTEGER (0..9) [0]

+-MCC-MNC-Digit ::= INTEGER (0..9) [1]

+-mnc ::= SEQUENCE OF SIZE(2..3) [2]

+-MCC-MNC-Digit ::= INTEGER (0..9) [0]

+-MCC-MNC-Digit ::= INTEGER (0..9) [1]

+-cellReservedForOperatorUse ::= ENUMERATED [notReserved]

+-trackingAreaCode ::= BIT STRING SIZE(16) [0000000000000001]

+-cellIdentity ::= BIT STRING SIZE(28) [0000000000000000000100000000]

+-cellBarred ::= ENUMERATED [notBarred]

+-intraFreqReselection ::= ENUMERATED [notAllowed]

+-csg-Indication ::= BOOLEAN [FALSE]

+-csg-Identity ::= BIT STRING OPTIONAL:Omit

+-cellSelectionInfo ::= SEQUENCE [0]

+-q-RxLevMin ::= INTEGER (-70..-22) [-53]

+-q-RxLevMinOffset ::= INTEGER OPTIONAL:Omit

+-p-Max ::= INTEGER OPTIONAL:Omit

+-freqBandIndicator ::= INTEGER (1..64) [7]

+-schedulingInfoList ::= SEQUENCE OF SIZE(1..maxSI-Message[32]) [2]

+-SchedulingInfo ::= SEQUENCE

+-si-Periodicity ::= ENUMERATED [rf8]

+-sib-MappingInfo ::= SEQUENCE OF SIZE(0..maxSIB-1[31]) [0]

+-SchedulingInfo ::= SEQUENCE

+-si-Periodicity ::= ENUMERATED [rf8]

+-sib-MappingInfo ::= SEQUENCE OF SIZE(0..maxSIB-1[31]) [1]

+-SIB-Type ::= ENUMERATED [sibType3]

+-tdd-Config ::= SEQUENCE OPTIONAL:Omit

+-si-WindowLength ::= ENUMERATED [ms20]

+-systemInfoValueTag ::= INTEGER (0..31) [0]

+-nonCriticalExtension ::= SEQUENCE OPTIONAL:Omit
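The identity fields in a SIB1 dump like the one above are easier to check once converted to numbers. Here is a minimal sketch using the values from this example (MCC 0-0-1, MNC 0-1, the 16-bit `trackingAreaCode` and the 28-bit `cellIdentity`). The helper name `decode_sib1_identity` is hypothetical. The split of `cellIdentity` into a 20-bit eNB ID and an 8-bit local cell ID is an assumption (the common macro-eNB convention); SIB1 itself only carries the 28-bit value.

```python
def decode_sib1_identity(mcc_digits, mnc_digits, tac_bits, cell_identity_bits):
    """Turn SIB1 identity fields (as printed in the dump) into readable values."""
    if len(tac_bits) != 16 or len(cell_identity_bits) != 28:
        raise ValueError("trackingAreaCode is 16 bits and cellIdentity is 28 bits")
    cell_identity = int(cell_identity_bits, 2)
    return {
        "plmn": "".join(map(str, mcc_digits)) + "-" + "".join(map(str, mnc_digits)),
        "tac": int(tac_bits, 2),
        "cell_identity": cell_identity,
        # Assumed macro-eNB split: upper 20 bits eNB ID, lower 8 bits local cell ID.
        "enb_id": cell_identity >> 8,
        "local_cell_id": cell_identity & 0xFF,
    }


ids = decode_sib1_identity([0, 0, 1], [0, 1],
                           "0000000000000001",
                           "0000000000000000000100000000")
print(ids)
```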

3) SIB 2

The most important information in SIB2 is:

i) RACH configuration

ii) BCCH, PCCH, PRACH, PDSCH, PUSCH and PUCCH configuration

iii) Sounding RS configuration

iv) UE timers and constants

Following is one example of SIB2. It looks to me that LTE SIB2 is similar to WCDMA SIB5, since it configures various common channels.

Ver:8,0,0,18

RRC_LTE:BCCH-DL-SCH-Message

BCCH-DL-SCH-Message ::= SEQUENCE

+-message ::= CHOICE [c1]

+-c1 ::= CHOICE [systemInformation]

+-systemInformation ::= SEQUENCE

+-criticalExtensions ::= CHOICE [systemInformation-r8]

+-systemInformation-r8 ::= SEQUENCE [0]

+-sib-TypeAndInfo ::= SEQUENCE OF SIZE(1..maxSIB[32]) [1]

+- ::= CHOICE [sib2]

+-sib2 ::= SEQUENCE [00]

+-ac-BarringInfo ::= SEQUENCE OPTIONAL:Omit

+-radioResourceConfigCommon ::= SEQUENCE

+-rach-Config ::= SEQUENCE

+-preambleInfo ::= SEQUENCE [0]

+-numberOfRA-Preambles ::= ENUMERATED [n52]

+-preamblesGroupAConfig ::= SEQUENCE OPTIONAL:Omit

+-powerRampingParameters ::= SEQUENCE

+-powerRampingStep ::= ENUMERATED [dB2]

+-preambleInitialReceivedTargetPower ::= ENUMERATED [dBm-104]

+-ra-SupervisionInfo ::= SEQUENCE

+-preambleTransMax ::= ENUMERATED [n6]

+-ra-ResponseWindowSize ::= ENUMERATED [sf10]

+-mac-ContentionResolutionTimer ::= ENUMERATED [sf48]

+-maxHARQ-Msg3Tx ::= INTEGER (1..8) [4]

+-bcch-Config ::= SEQUENCE

+-modificationPeriodCoeff ::= ENUMERATED [n4]

+-pcch-Config ::= SEQUENCE

+-defaultPagingCycle ::= ENUMERATED [rf128]

+-nB ::= ENUMERATED [oneT]

+-prach-Config ::= SEQUENCE

+-rootSequenceIndex ::= INTEGER (0..837) [22]

+-prach-ConfigInfo ::= SEQUENCE

+-prach-ConfigIndex ::= INTEGER (0..63) [3]

+-highSpeedFlag ::= BOOLEAN [FALSE]

+-zeroCorrelationZoneConfig ::= INTEGER (0..15) [5]

+-prach-FreqOffset ::= INTEGER (0..94) [2]

+-pdsch-Config ::= SEQUENCE

+-referenceSignalPower ::= INTEGER (-60..50) [18]

+-p-b ::= INTEGER (0..3) [0]

+-pusch-Config ::= SEQUENCE

+-pusch-ConfigBasic ::= SEQUENCE

+-n-SB ::= INTEGER (1..4) [1]

+-hoppingMode ::= ENUMERATED [interSubFrame]

+-pusch-HoppingOffset ::= INTEGER (0..98) [4]

+-enable64QAM ::= BOOLEAN [FALSE]

+-ul-ReferenceSignalsPUSCH ::= SEQUENCE

+-groupHoppingEnabled ::= BOOLEAN [TRUE]

+-groupAssignmentPUSCH ::= INTEGER (0..29) [0]

+-sequenceHoppingEnabled ::= BOOLEAN [FALSE]

+-cyclicShift ::= INTEGER (0..7) [0]

+-pucch-Config ::= SEQUENCE

+-deltaPUCCH-Shift ::= ENUMERATED [ds2]

+-nRB-CQI ::= INTEGER (0..98) [2]

+-nCS-AN ::= INTEGER (0..7) [6]

+-n1PUCCH-AN ::= INTEGER (0..2047) [0]

+-soundingRS-UL-Config ::= CHOICE [setup]

+-setup ::= SEQUENCE [0]

+-srs-BandwidthConfig ::= ENUMERATED [bw3]

+-srs-SubframeConfig ::= ENUMERATED [sc0]

+-ackNackSRS-SimultaneousTransmission ::= BOOLEAN [TRUE]

+-srs-MaxUpPts ::= ENUMERATED OPTIONAL:Omit

+-uplinkPowerControl ::= SEQUENCE

+-p0-NominalPUSCH ::= INTEGER (-126..24) [-85]

+-alpha ::= ENUMERATED [al08]

+-p0-NominalPUCCH ::= INTEGER (-127..-96) [-117]

+-deltaFList-PUCCH ::= SEQUENCE

+-deltaF-PUCCH-Format1 ::= ENUMERATED [deltaF0]

+-deltaF-PUCCH-Format1b ::= ENUMERATED [deltaF3]

+-deltaF-PUCCH-Format2 ::= ENUMERATED [deltaF0]

+-deltaF-PUCCH-Format2a ::= ENUMERATED [deltaF0]

+-deltaF-PUCCH-Format2b ::= ENUMERATED [deltaF0]

+-deltaPreambleMsg3 ::= INTEGER (-1..6) [4]

+-ul-CyclicPrefixLength ::= ENUMERATED [len1]

+-ue-TimersAndConstants ::= SEQUENCE

+-t300 ::= ENUMERATED [ms1000]

+-t301 ::= ENUMERATED [ms1000]

+-t310 ::= ENUMERATED [ms1000]

+-n310 ::= ENUMERATED [n1]

+-t311 ::= ENUMERATED [ms1000]

+-n311 ::= ENUMERATED [n1]

+-freqInfo ::= SEQUENCE [00]

+-ul-CarrierFreq ::= INTEGER OPTIONAL:Omit

+-ul-Bandwidth ::= ENUMERATED OPTIONAL:Omit

+-additionalSpectrumEmission ::= INTEGER (1..32) [1]

+-mbsfn-SubframeConfigList ::= SEQUENCE OF OPTIONAL:Omit

+-timeAlignmentTimerCommon ::= ENUMERATED [sf750]

+-nonCriticalExtension ::= SEQUENCE OPTIONAL:Omit
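The RACH parameters in this SIB2 determine how the UE ramps its preamble power. Here is a minimal sketch using the example values: `preambleInitialReceivedTargetPower` dBm-104, `powerRampingStep` dB2 and `preambleTransMax` n6.

The target formula is PREAMBLE_RECEIVED_TARGET_POWER from 36.321 section 5.1.3. DELTA_PREAMBLE is 0 dB here, because `prach-ConfigIndex` 3 uses preamble format 0 in FDD. The transmit power follows 36.213 section 6.1.

The function names are hypothetical, and P_CMAX = 23 dBm and the 120 dB path loss are assumed values for illustration.

```python
def preamble_target_powers(initial_dbm, step_db, preamble_trans_max, delta_preamble_db=0):
    """PREAMBLE_RECEIVED_TARGET_POWER (dBm) for each attempt, per 36.321 section 5.1.3.

    delta_preamble_db is DELTA_PREAMBLE (0 dB for preamble format 0).
    """
    return [initial_dbm + delta_preamble_db + (counter - 1) * step_db
            for counter in range(1, preamble_trans_max + 1)]


def prach_tx_power(target_dbm, pathloss_db, p_cmax_dbm=23):
    """P_PRACH = min(P_CMAX, target + PL) per 36.213 section 6.1; 23 dBm is assumed."""
    return min(p_cmax_dbm, target_dbm + pathloss_db)


targets = preamble_target_powers(-104, 2, 6)  # dBm-104, dB2, n6 from the SIB2 above
print(targets)  # [-104, -102, -100, -98, -96, -94]
print([prach_tx_power(t, pathloss_db=120) for t in targets])  # [16, 18, 20, 22, 23, 23]
```

With these assumed conditions, the last two attempts are capped by P_CMAX. If a test UE never gets a RACH Response, this ramp is one of the first things worth checking.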

4) RRC : PRACH Preamble


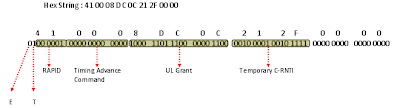

5) RRC : RACH Response


6) RRC : RRC Connection Request


Interim Comments

From this point on, the L3 messages carry both RRC and NAS messages, so you need an overall understanding of NAS messages as well as RRC messages.

To handle the data-traffic-related IEs in NAS messages, you need to understand the details of TS 29.274 (GTPv2-C), which defines the core network signalling that creates the bearers those IEs describe. The NAS messages themselves are defined in TS 24.301. Of course, it would be impossible to understand all those details within a day. My approach is to go through the following tables as often as possible until I get a big picture in my mind. You may have to go back and forth between 36.331 and 29.274.

* Table 7.2.2-1: Information Elements in a Create Session Response

* Table 7.2.3-1: Information Elements in a Create Bearer Request

* Table 7.2.3-2: Bearer Context within Create Bearer Request

* Table 7.2.5-1: Information Elements in a Bearer Resource Command

* Table 7.2.7-1: Information Elements in a Modify Bearer Request

* Table 7.2.8-1: Information Elements in a Modify Bearer Response

* Table 7.2.9.1-1: Information Elements in a Delete Session Request

* Table 7.2.9.2-1: Information Elements in a Delete Bearer Request

* Table 7.2.10.2-1: Information Elements in Delete Bearer Response

* Table 7.3.5-1: Information Elements in a Context Request

* Table 7.3.6-2: MME/SGSN UE EPS PDN Connections within Context Response

* Table 7.3.8-1: Information Elements in an Identification Request

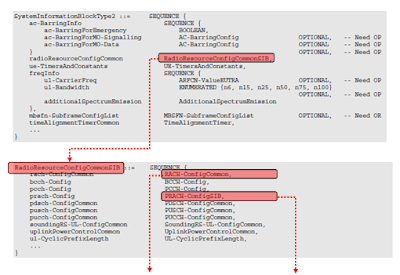

7) RRC : RRC Connection Setup

As you see in the following diagram, the most important IE (information element) in the RRC Connection Setup message is "RadioResourceConfigDedicated", under which you can set up the SRB, DRB, MAC and PHY configuration. Even though there are IEs related to DRBs, RRC Connection Setup in practice establishes only SRB1; SRB2 and the DRBs are added later with RRC Connection Reconfiguration. This is similar to the WCDMA RRC Connection Setup message, in which you usually set up only SRBs (the control channel part) even though there are IEs for RBs (data traffic).

Note that you will find the "RadioResourceConfigDedicated" IE not only in the RRC Connection Setup message but also in the RRC Connection Reconfiguration message. You have to be careful that what you set in the RRC Connection Reconfiguration message properly matches what you set in the RRC Connection Setup message. This means you need a very clear understanding of how the two messages relate to each other. This is also very similar to the WCDMA case.
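One way to reason about that correlation is to treat each RadioResourceConfigDedicated as a delta applied on top of the configuration the UE already holds. Here is a heavily simplified, hypothetical model of that idea: `RadioConfig` and `apply_dedicated` are illustrative names, not a real API.

It applies the SRB add/modify list, then the DRB release list, then the DRB add/modify list. It also flags two things that look like a mismatch between messages: releasing a DRB that was never set up, and re-binding an existing DRB to a different EPS bearer. These flags are a test-writer's sanity check, not a statement of exact UE behaviour.

```python
from dataclasses import dataclass, field


@dataclass
class RadioConfig:
    """Hypothetical, heavily simplified view of the UE's dedicated radio config."""
    srbs: set = field(default_factory=set)
    drbs: dict = field(default_factory=dict)  # drb-Identity -> eps-BearerIdentity


def apply_dedicated(cfg, srb_add_mod=(), drb_release=(), drb_add_mod=()):
    """Apply one RadioResourceConfigDedicated-like delta on top of cfg."""
    cfg.srbs.update(srb_add_mod)
    for drb_id in drb_release:
        if drb_id not in cfg.drbs:
            raise ValueError(f"release of DRB {drb_id}, which was never set up")
        del cfg.drbs[drb_id]
    for drb_id, eps_bearer_id in drb_add_mod:
        old = cfg.drbs.get(drb_id)
        if old is not None and old != eps_bearer_id:
            raise ValueError(f"DRB {drb_id} is already bound to EPS bearer {old}")
        cfg.drbs[drb_id] = eps_bearer_id
    return cfg


cfg = apply_dedicated(RadioConfig(), srb_add_mod=[1])                  # RRC Connection Setup: SRB1 only
cfg = apply_dedicated(cfg, srb_add_mod=[2], drb_add_mod=[(1, 5)])      # Reconfiguration: SRB2 + DRB1
print(cfg)
```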

8) RRC : RRC Connection Setup Complete + NAS : Attach Request


15) RRC : RRC Connection Reconfiguration + NAS : Attach Accept

An important procedure done in this step is "ESM : Activate Default EPS Bearer Context Request".

One thing you will notice here is that in LTE the packet call (the default EPS bearer) is activated by the network as part of the attach procedure, whereas in UMTS most packet calls are initiated by the UE. The network also assigns an IP address to the UE here.
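The IP address mentioned above travels in the PDN address IE of the ESM message. Here is a minimal sketch of decoding that IE, assuming the layout of 24.301 section 9.9.4.9:

- a length octet (the IE is LV-encoded in this message, so there is no IEI octet)
- a PDN type in the low 3 bits of the first contents octet: 1 = IPv4, 2 = IPv6, 3 = IPv4v6
- the address information: 4 octets of IPv4, an 8-octet IPv6 interface identifier, or both

The function name `decode_pdn_address` is hypothetical.

```python
import ipaddress


def decode_pdn_address(lv: bytes) -> dict:
    """Decode an LV-encoded PDN address IE (length octet + contents, no IEI)."""
    if len(lv) < 2:
        raise ValueError("PDN address IE too short")
    length = lv[0]
    contents = lv[1:1 + length]
    if len(contents) != length:
        raise ValueError("truncated PDN address IE")
    pdn_type = contents[0] & 0x07  # upper 5 bits are spare
    info = contents[1:]
    if pdn_type == 1 and len(info) == 4:
        return {"type": "IPv4", "ipv4": str(ipaddress.IPv4Address(info))}
    if pdn_type == 2 and len(info) == 8:
        return {"type": "IPv6", "ipv6_iid": info.hex()}
    if pdn_type == 3 and len(info) == 12:
        return {"type": "IPv4v6", "ipv6_iid": info[:8].hex(),
                "ipv4": str(ipaddress.IPv4Address(info[8:]))}
    raise ValueError(f"unsupported PDN type {pdn_type} with {len(info)} address octets")


print(decode_pdn_address(bytes([5, 0x01, 192, 168, 0, 10])))
```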

16) RRC : RRC Connection Reconfiguration Complete + NAS : Attach Complete

Overall Protocol Sequence of the typical packet call is as follows: (You will notice the overall sequence is very similar to the WCDMA sequence)

An important procedure done in this step is "ESM : Activate Default EPS Bearer Context Accept".

25) RRC : RRC Connection Reconfiguration + NAS : Activate Dedicated EPS Bearer Context Request